Deploying on-premise big data pipelines.

Introduction

This post is about a big data deployment that a few colleagues and I did a few years ago. At the time, only a few companies were cloud-first. Cloud adoption (mainly AWS at the time) ranged from part on-premise/part cloud to completely on-premise, and some companies hadn’t ventured into the cloud at all.

This made for some interesting choices for the tech stack. For instance, compute and storage had to be carefully managed, and permissions had to be handled manually.

Use case and background

Prior to this exercise, most of our data warehouse was on Postgres (again, an on-premise deployment). That worked for a few years, until the data volume grew from gigabytes per week or month to terabytes per month (gigabytes per day or week). We had to start exploring alternatives that were:

- Scalable.

- Maintainable.

- Performant for the volume of data.

- Aligned with the overall stack being used by the company at the time.

- Proven to be effective via a Proof Of Concept.

After exploring several such alternatives and talking to teams with similar use cases, we decided on the following:

Tech stack

Almost all the tools and technologies used are open source. The exception is the Hadoop cluster, which was deployed via Cloudera.

| Area | Tools/technology |
|---|---|
| Compute | Hadoop |
| Storage | HDFS |
| Orchestration, workflow management | Apache Airflow |
| Containerization | Docker (published to GitLab container registry) |
| Programming Language | Python 2.7 at the time and later 3.x, PySpark |
| Analytics/data presentation layer | Presto on Apache Hive with Spark compute (wrapper on Apache Hive) |
| CI/CD | Custom-built Airflow operators |

Architecture

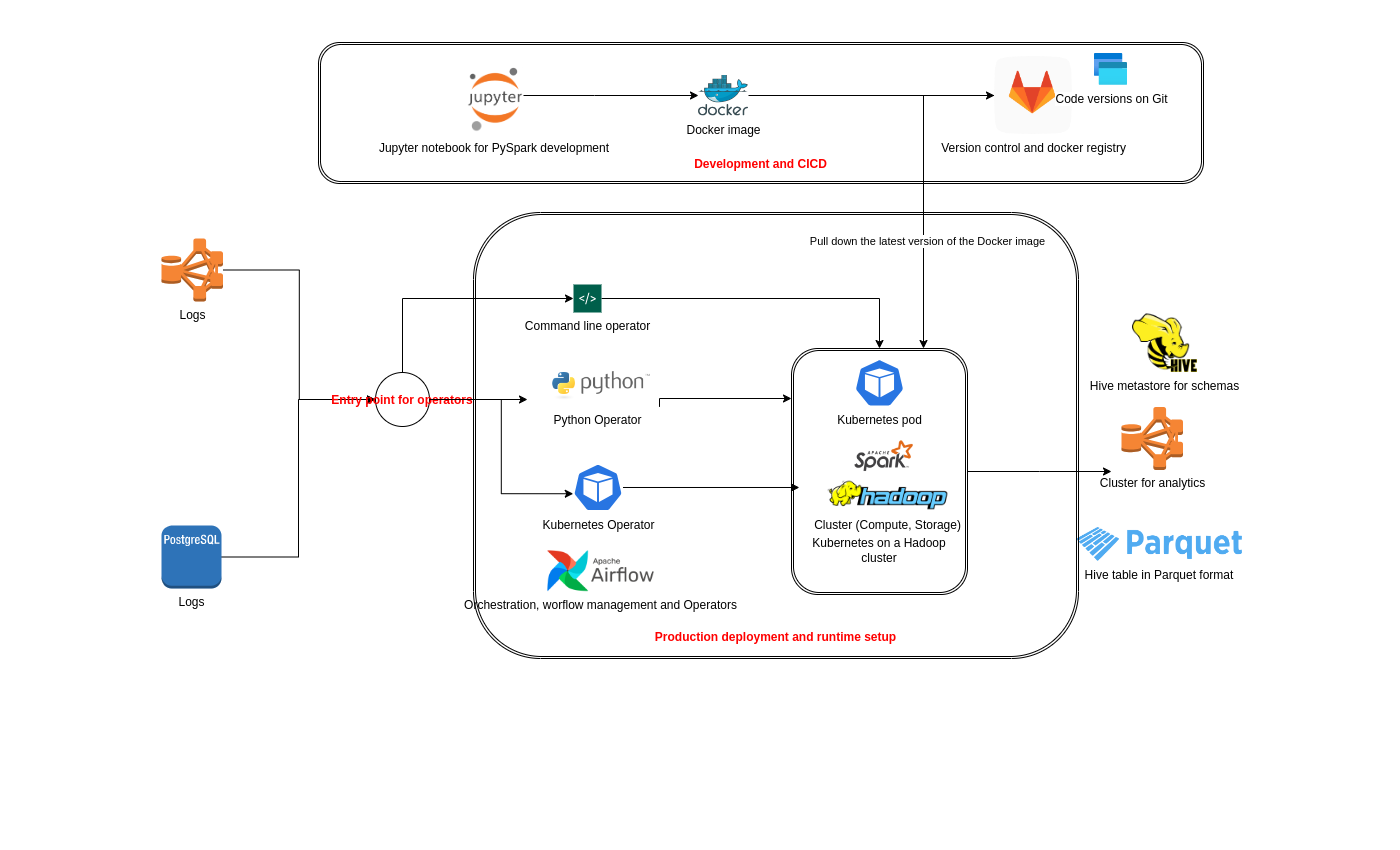

The diagram above shows the overall architecture. We had two data sources, both of them housing fairly large amounts of data, on the order of gigabytes per week to petabytes per month.

Implementation

The implementation contains the following components:

From a Data Engineering perspective:

- Reading from the data sources.

- Transforming the data.

- Creating appropriate destination data structures (Apache Hive tables).

- Loading the data.

- Backfills and fault tolerance of the data pipeline.

- Alerting - for workflow/job failures or upstream dependency problems.
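
To make the backfill item concrete, here is a minimal sketch (an illustration only, not the original pipeline code) of how the daily partitions that still need loading could be computed. The function name `missing_partitions` and the set of already loaded dates are hypothetical.

```python
from datetime import date, timedelta


def missing_partitions(start, end, loaded):
    """Return the daily partitions in [start, end] that are not in `loaded`."""
    days = (end - start).days
    wanted = [start + timedelta(days=i) for i in range(days + 1)]
    return [d for d in wanted if d not in loaded]


# Hypothetical state: two of the five days were already loaded.
already_loaded = {date(2020, 1, 1), date(2020, 1, 3)}
to_backfill = missing_partitions(date(2020, 1, 1), date(2020, 1, 5), already_loaded)
print([d.isoformat() for d in to_backfill])
# prints ['2020-01-02', '2020-01-04', '2020-01-05']
```

Because the function only returns what is missing, rerunning a backfill after a partial failure does not reload partitions that already succeeded.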

From a Data Infrastructure perspective:

- Managing code versions.

- Handling dependencies (and their versions) required for the code to run.

- Deploying code changes.

- Permissions.

Design decisions

Programming Language

Python/PySpark.

The Spark API is available in four languages: Scala, Python, Java and R. Of these, Scala and Python were the top contenders, since Java was a new language for our tech stack and offered few unique advantages over Python and Scala.

The trade-offs between Scala and Python:

- Spark performance: although Spark offers APIs in both Python and Scala, the initial versions of the Python API were not as performant as the Scala API. However, later versions of the Python API caught up with the Scala API’s performance.

- Ease of use: Did we have enough knowledge within the team to use Scala comfortably? Was it worth maintaining one project in Scala while all the other projects had historically been in Python?

- Maintenance: How easy is it to maintain code? How are dependencies managed?

- Learning curve with Scala: since Python was our primary language, some of us had to pick up Scala as a new programming language, which added to the complexity.

To explore the above items, we performed small Proof Of Concept exercises, such as:

- Benchmarking API performance between Scala and Python.

- Building dependencies in Scala using sbt vs. pip in Python.
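
A benchmarking exercise of this kind can be as simple as timing the same job several times and comparing medians. The sketch below is an assumption about how such a harness might look on the Python side. `time_call` and `placeholder_job` are hypothetical names, and the placeholder workload stands in for a real Spark action.

```python
import statistics
import time


def time_call(fn, repeats=5):
    """Run `fn` several times and return the median wall-clock seconds."""
    samples = []
    for _ in range(repeats):
        started = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - started)
    return statistics.median(samples)


def placeholder_job():
    # Stand-in workload; a real run would trigger a Spark action instead.
    return sum(i * i for i in range(100_000))


median_seconds = time_call(placeholder_job)
print(f"median over 5 runs: {median_seconds:.4f}s")
```

Using the median rather than the mean keeps a single slow run (for example, a cold start) from dominating the comparison.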

Orchestration and workflow management

Apache Airflow

Task management, scheduling and other actions had to be managed. Apache Airflow, hosted with a Postgres backend, was already available, so we chose to use it.

Airflow did not have all the operators we required. So, we ended up writing custom operators for the following:

- Python (running Python scripts)

- Apache Hive (running queries on Apache Hive)

- Shell operator (executing bash or other shell commands)

- Docker operator (running Docker commands)
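
As an illustration of what sits inside a Docker-style operator, the sketch below builds the `docker run` command for a pinned image tag. It is not the original operator code: the helper name `build_docker_run`, the registry path, the tag and the script name are all hypothetical, and in Airflow this logic would typically be called from the `execute` method of a `BaseOperator` subclass.

```python
import shlex


def build_docker_run(image, tag, command, env=None):
    """Build the argv list for `docker run` on a pinned image tag."""
    argv = ["docker", "run", "--rm"]
    # Sort the variables so the generated command is deterministic.
    for key, value in sorted((env or {}).items()):
        argv += ["-e", f"{key}={value}"]
    argv.append(f"{image}:{tag}")
    argv += shlex.split(command)
    return argv


# Hypothetical registry path, tag and script name.
argv = build_docker_run(
    image="registry.example.com/data/pipeline",
    tag="1.4.2",
    command="python load_events.py --date 2020-01-01",
    env={"ENV": "prod"},
)
print(shlex.join(argv))
```

Returning an argument list instead of a single string means the command can be passed to `subprocess.run` without going through a shell.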

Analytics

Apache Hive/Presto

Beyond the data pipelines themselves, we also had to decide how the data would be consumed.

Some options were:

- Apache Hive -> Postgres -> Tableau/Queries/Jupyter notebooks.

- Apache Hive -> Presto -> Tableau (using the Presto connector)/Jupyter notebooks.

File formats

Parquet.

Options:

- JSON.

- CSV.

- Parquet.

- ORC.

Reasons for choosing Parquet: compression options, schema evolution, partition discovery, columnar storage, encryption and overall better performance.

Compute

A shared Hadoop cluster was already available, so we only needed permissions to be set up to access it.

Storage

Straightforward HDFS storage with an Apache Hive metastore. We created a new Apache Hive metastore for our project since the entire storage was shared.

CI/CD

We used GitLab for the following:

- Code versions.

- Publishing Docker image versions to the GitLab container registry.

- Running pytest checks.
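
For readers unfamiliar with pytest, a check of this kind is just a function whose name starts with `test_` and that uses plain `assert` statements. The transform `normalize_event` below is a hypothetical example, not one of the original pipeline functions.

```python
def normalize_event(raw):
    """Hypothetical transform: trim the user id and cast the amount to float."""
    return {
        "user_id": raw["user_id"].strip(),
        "amount": float(raw["amount"]),
    }


def test_normalize_event_casts_and_trims():
    raw = {"user_id": "  u42 ", "amount": "19.90"}
    assert normalize_event(raw) == {"user_id": "u42", "amount": 19.9}
```

Keeping transforms as plain functions like this lets them be tested in CI without a Spark or Hadoop cluster.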

Consumption and analytics

Now that we have discussed the ingestion pipeline, the next step is making the datasets available for users.

Since we were dealing with large data volumes, querying Apache Hive directly (via Presto) wasn’t scalable, so we used Presto on Spark.

Analytics was done in Jupyter notebooks, where the query results were used to build visualizations.

Analytics teams built their own DAGs using the datasets we created.

Learning

Performance

The Hadoop cluster was performant enough to ingest data at the rate we expected. Query response time depended on how the Apache Hive table was partitioned.
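
The reason partitioning matters so much is partition pruning: a query that filters on the partition column only has to read the matching directories. The sketch below models that with plain Python, assuming a hypothetical `events` table partitioned by a daily `dt` column and stored under `/warehouse/events`. Hive lays partitions out as `column=value` directories.

```python
from datetime import date


def partition_path(table_root, day):
    """Hive-style partition directory, e.g. <root>/dt=2020-01-01."""
    return f"{table_root}/dt={day.isoformat()}"


def prune(partition_days, start, end):
    """Keep only the partitions a query on [start, end] has to scan."""
    return [d for d in partition_days if start <= d <= end]


# Hypothetical table with one partition per day in January 2020.
all_days = [date(2020, 1, n) for n in range(1, 32)]
scanned = prune(all_days, date(2020, 1, 10), date(2020, 1, 12))
print(len(all_days), "partitions,", len(scanned), "scanned")
for day in scanned:
    print(partition_path("/warehouse/events", day))
```

A query on three days touches 3 of the 31 directories here. A query that filters on a non-partition column would have to scan all of them.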

Improvements

Some future improvements:

- Managing Python dependencies and packaging better with a dedicated tool such as Poetry or pip-tools.

- Creating leaner Docker images to improve deployment times.

- Moving away from Apache Hive (see the section on Moving to the cloud).

Moving to the cloud

When the migration to the cloud was started, the following changes were made to the architecture:

| Type | On-premise | Cloud |
|---|---|---|
| Query Engine | Hive | Snowflake |
| Storage | HDFS | AWS S3 |
| Compute | Hadoop | AWS EMR, EC2 |
| CI/CD | GitLab CI | AWS CodeBuild |
| Docker registry | GitLab container registry | AWS ECR |
| Orchestration, workflow management | Apache Airflow | Amazon Managed Workflows for Apache Airflow (MWAA) |
| Permissions | Manual | AWS IAM |

Conclusion

Overall, the project helped us make our first foray into big data and keep up with the current and future data needs of the organization.

The cloud allowed us to scale and automate many of the steps for development, deployment and maintenance.